What I Found

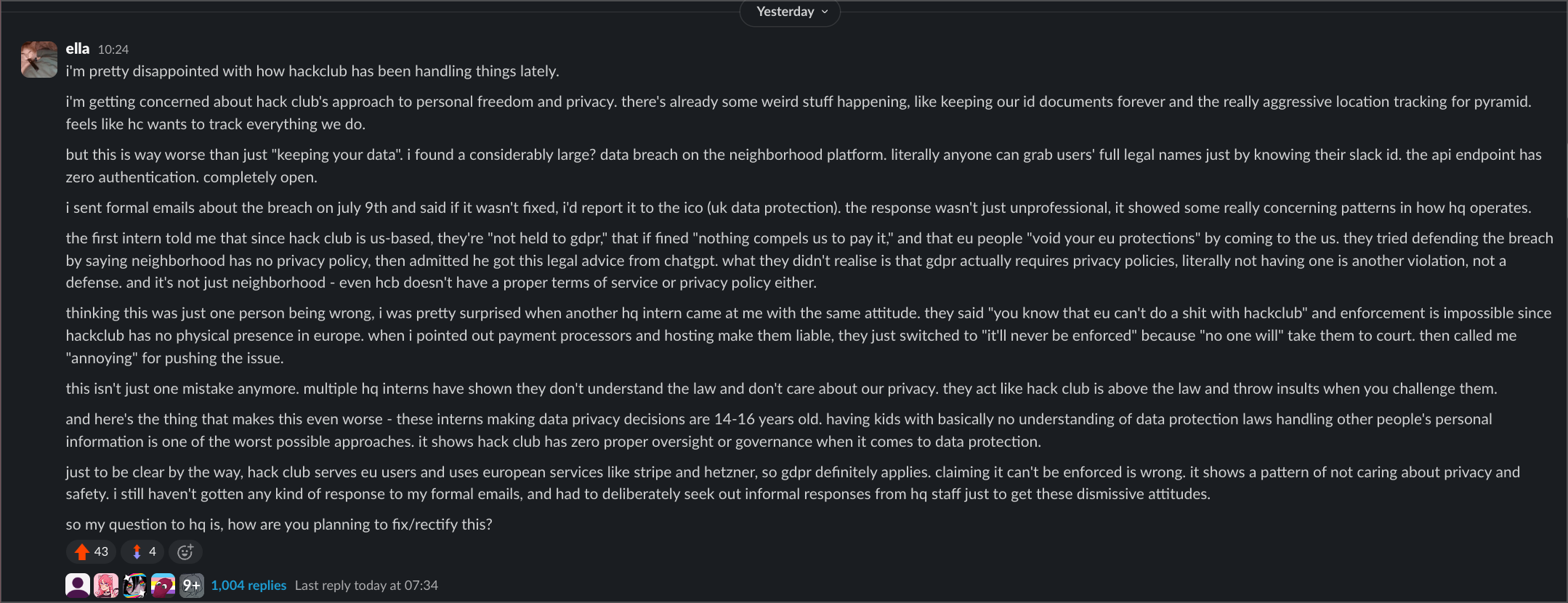

In July 2025, I discovered something pretty concerning about Hack Club, an organisation that serves thousands of young makers worldwide. I was just checking out their programmes when I stumbled across a bunch of serious data privacy issues that put our community at real risk.

The biggest issue was in their Neighbourhood programme, which offers housing in San Francisco to participants who complete coding projects. The platform had a completely unprotected API endpoint that exposed participants' full legal names to anyone with their Slack ID. No authentication required. Just a simple URL with someone's Slack ID would return their real name.

This wasn't a one-off either. Their Juice programme had leaked participants' names, phone numbers, and email addresses through an unprotected link. Past events like Highseas had similar vulnerabilities exposing user location data. The pattern was obvious: they keep building critical systems that handle sensitive data without any basic security measures.

The Concerning Response

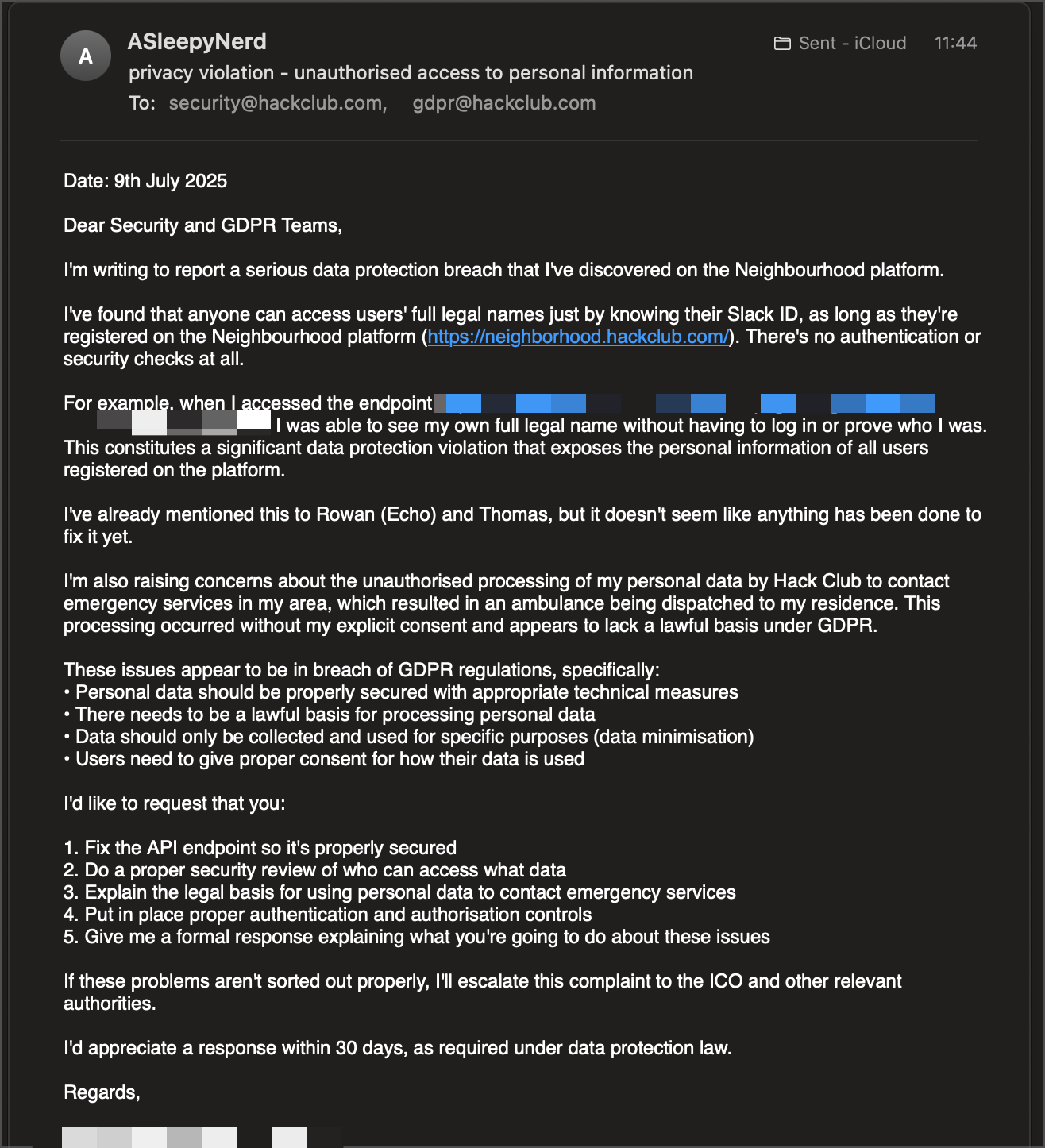

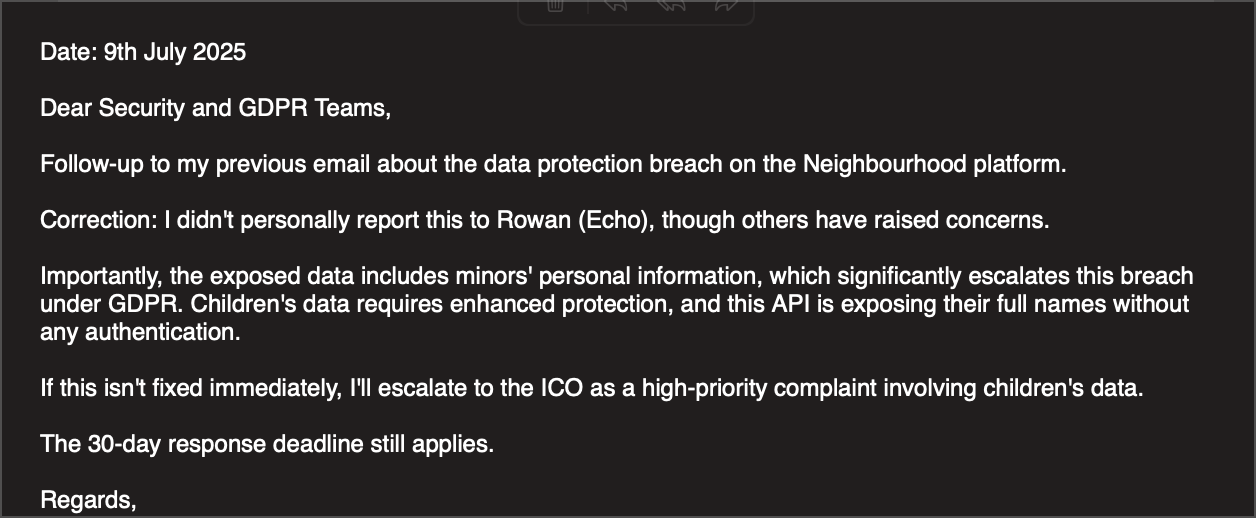

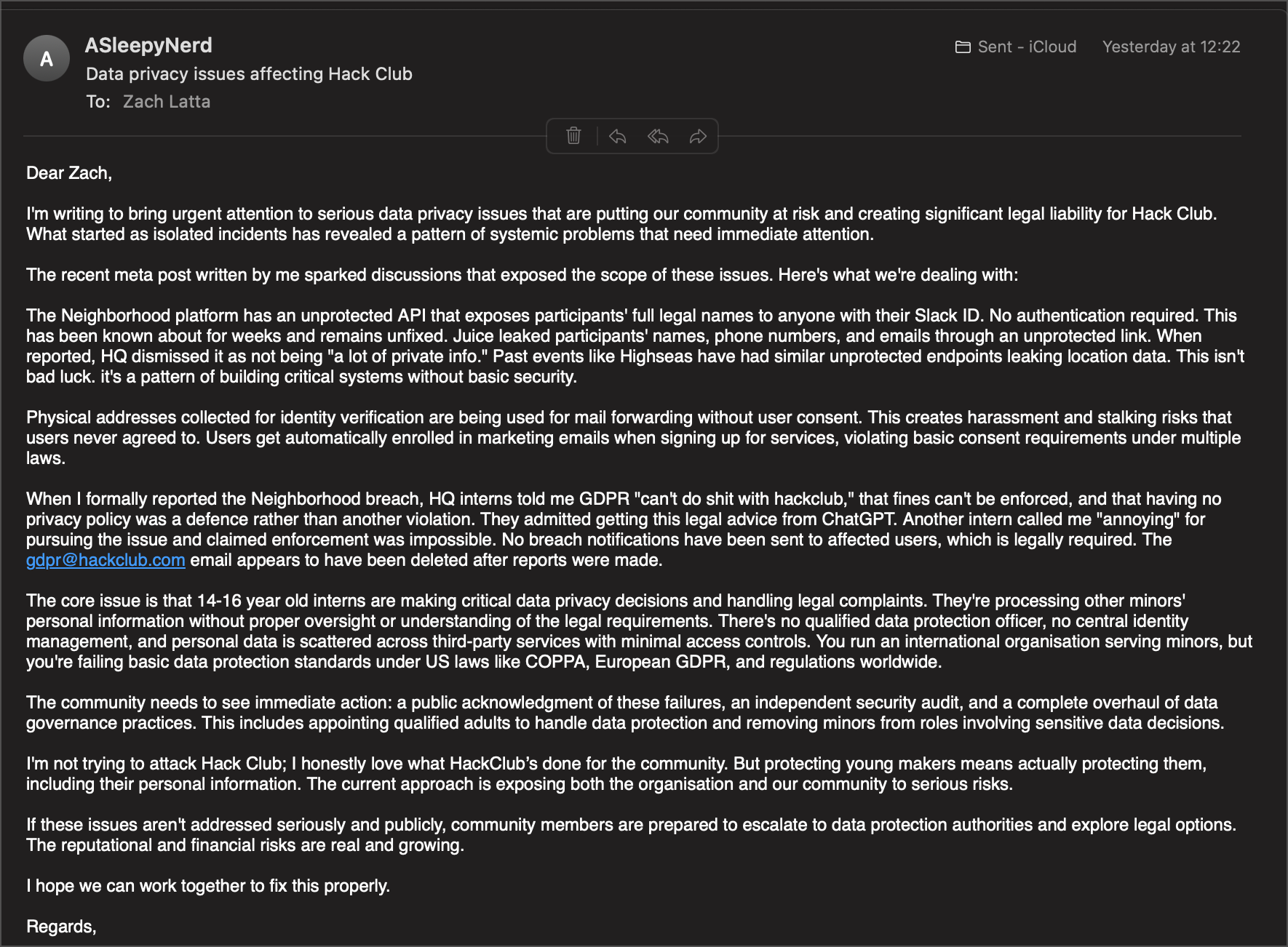

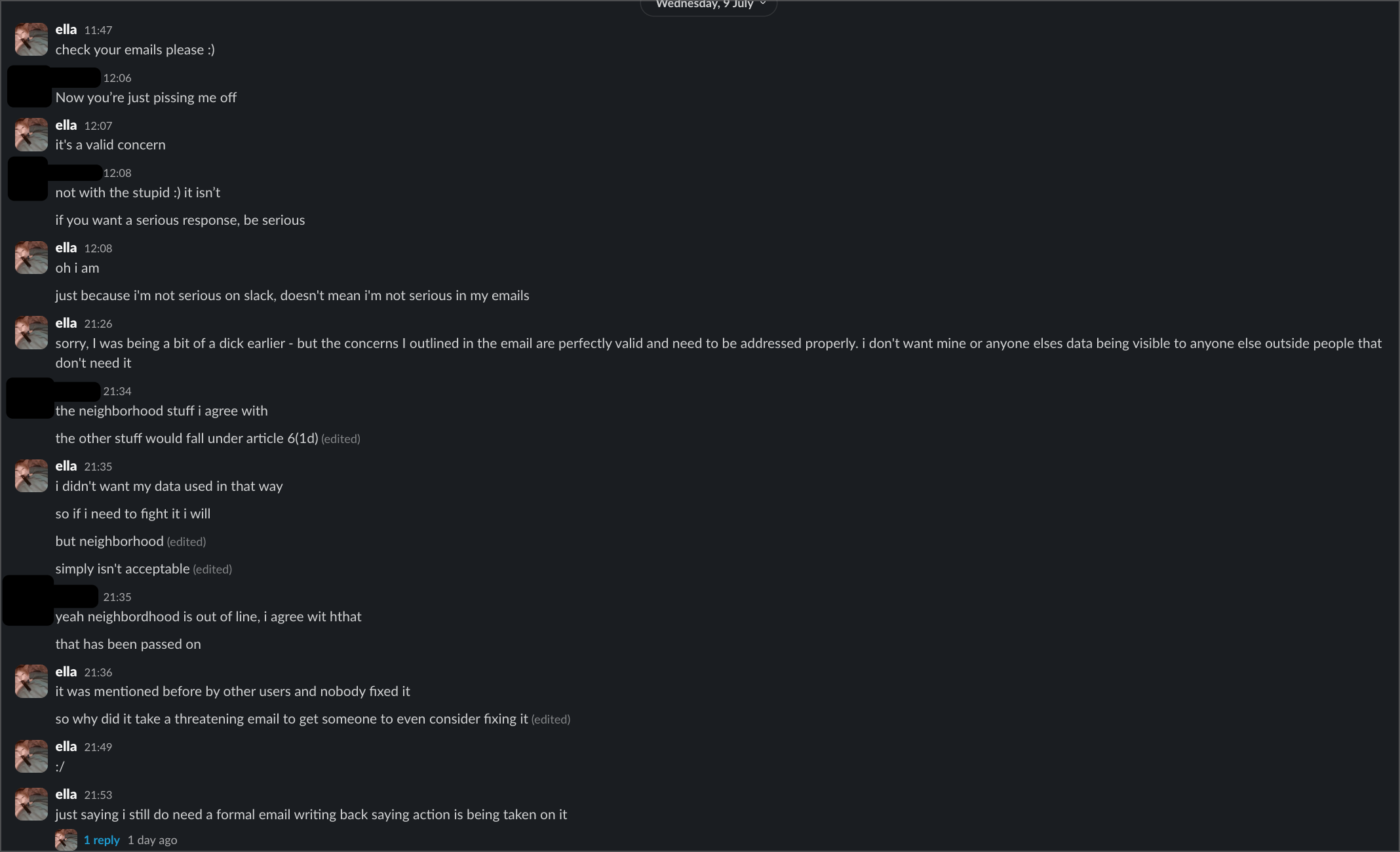

On July 9th, I sent formal data breach notifications to both security@hackclub.com and gdpr@hackclub.com (which you're supposed to do when you find this stuff). I got no response.

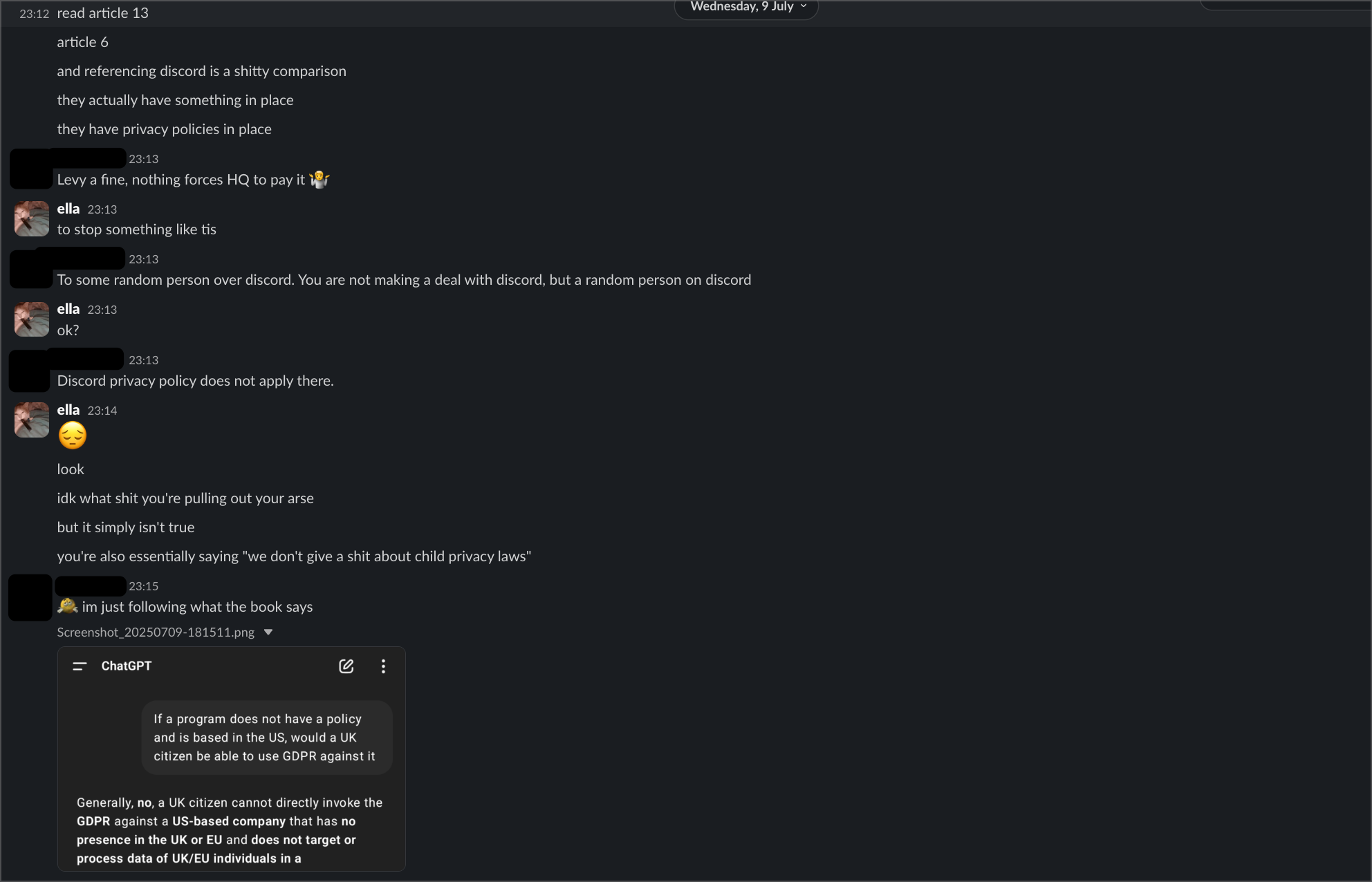

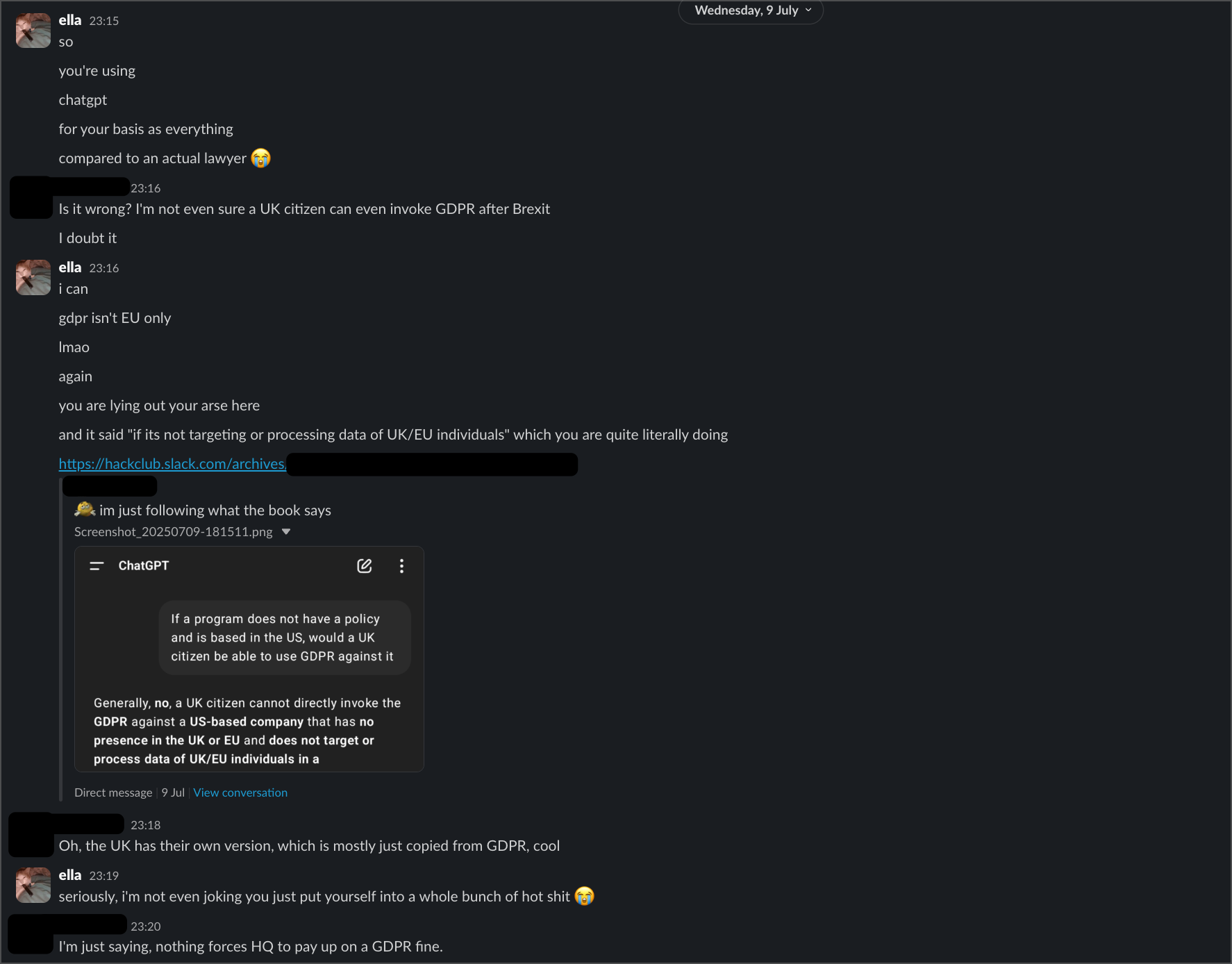

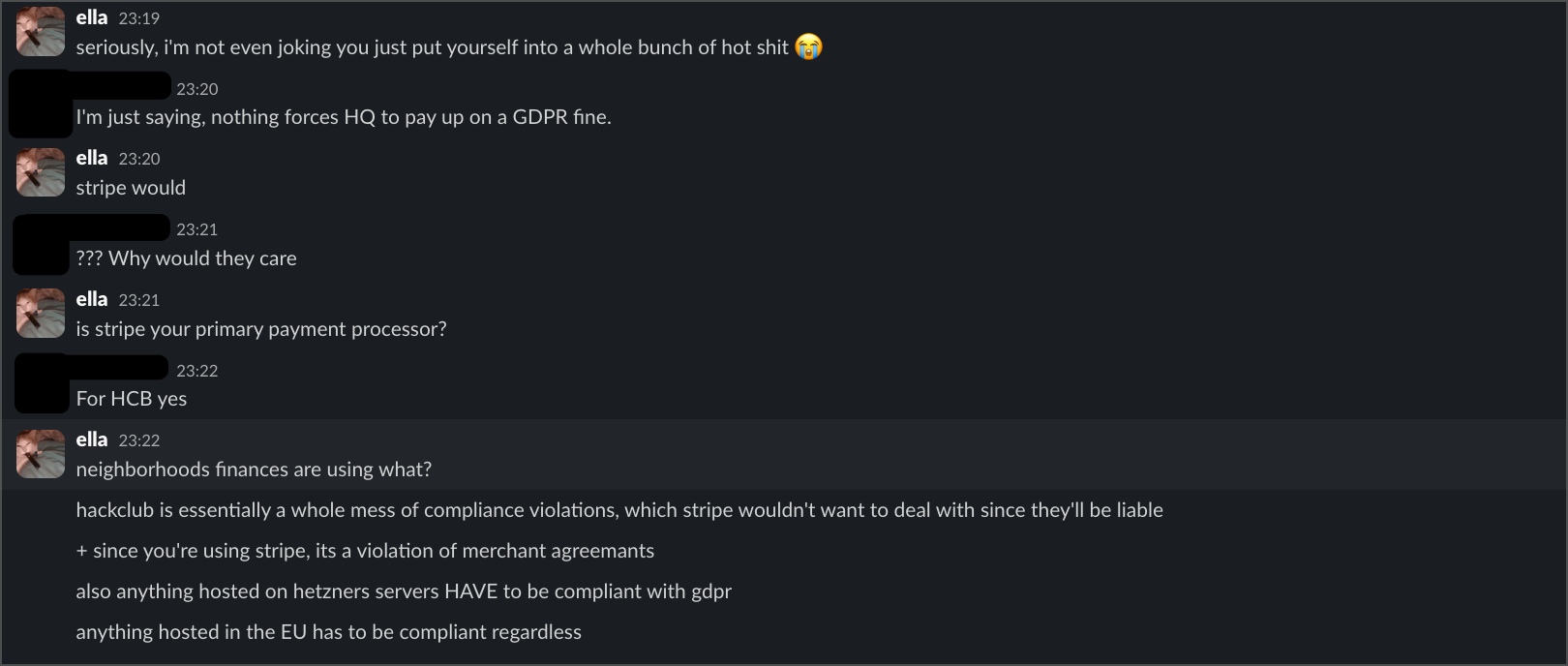

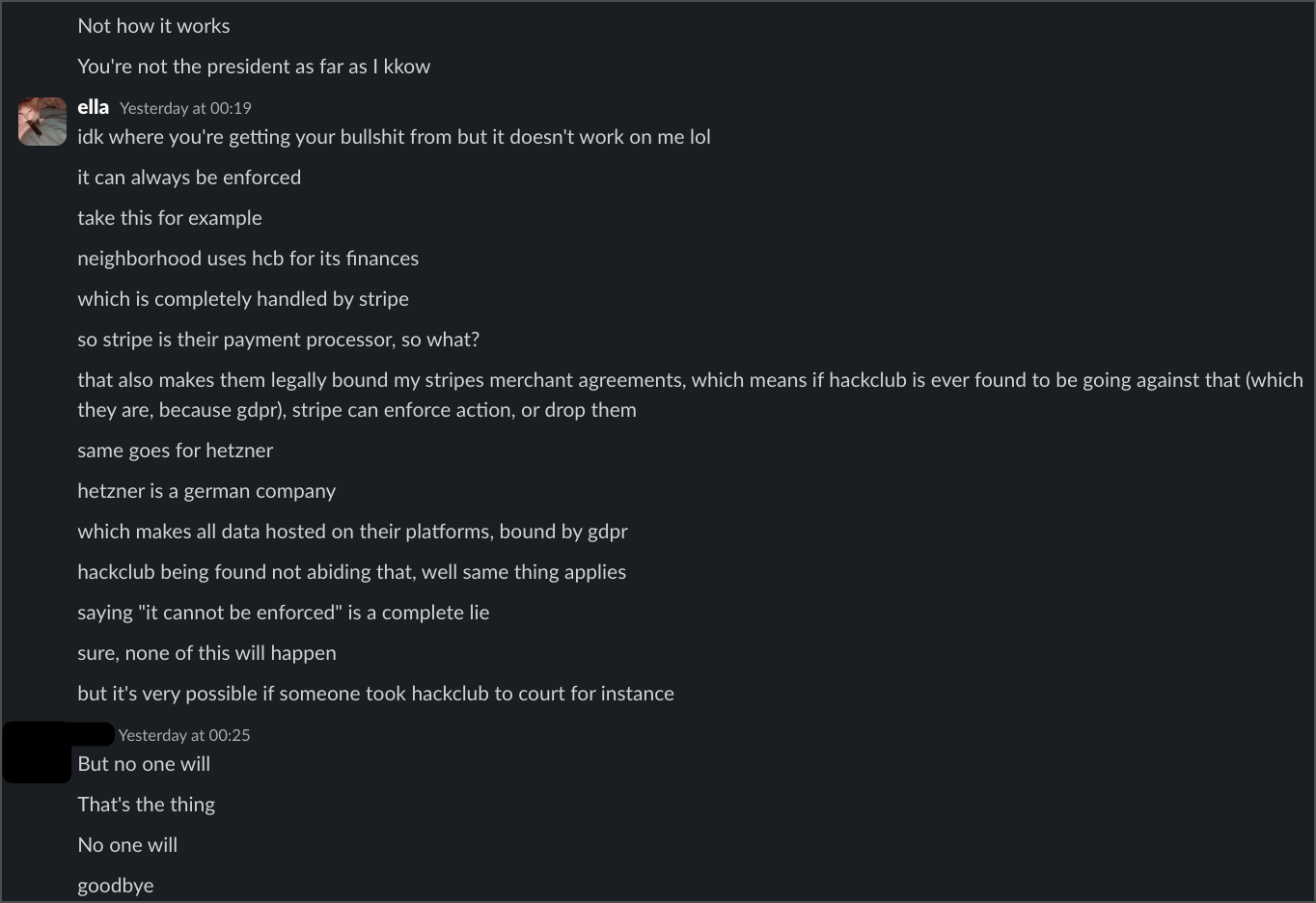

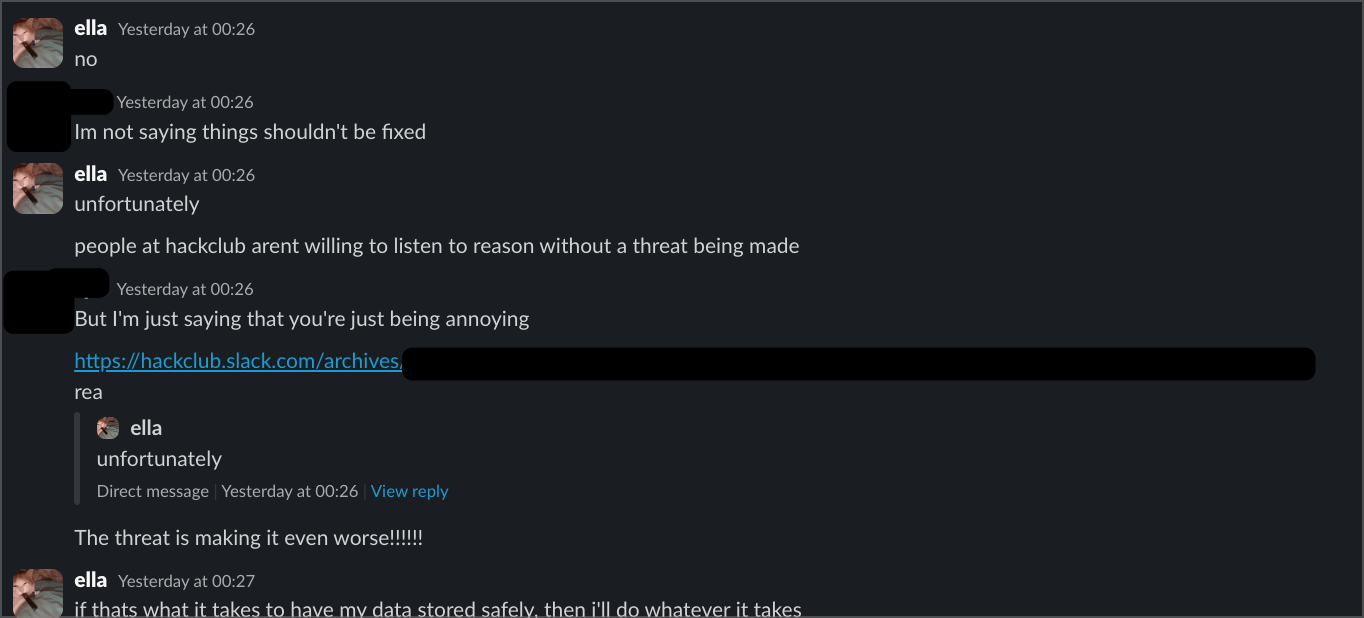

When I tried to get informal responses from HQ staff, their reactions were... wow. The first intern told me that since Hack Club is US-based, they're "not held to GDPR," that if fined "nothing compels us to pay it," and that EU users "void your EU protections" by coming to the US. When I pointed out that having no privacy policy was itself a violation, he admitted getting this legal advice from ChatGPT. Yes, really.

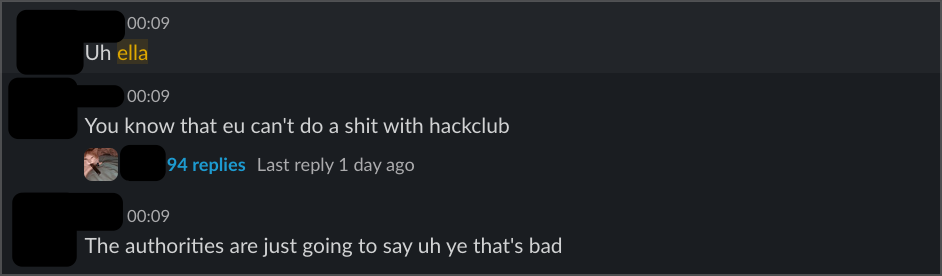

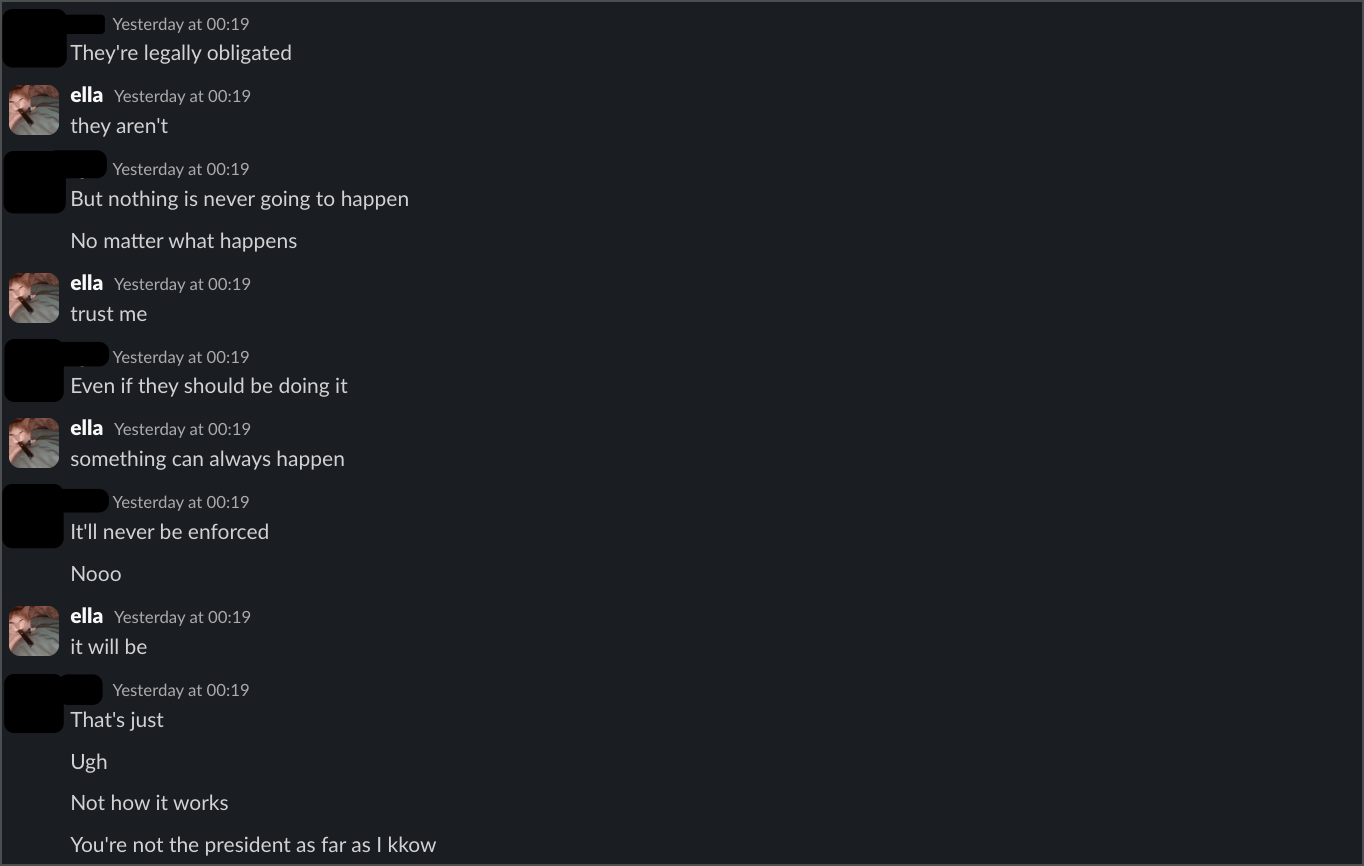

A second intern said basically the same thing, telling me "you know that EU can't do a shit with hackclub" and that enforcement was impossible. When I explained how payment processors and hosting providers create liability, she called me "annoying" for pursuing the issue.

The Real Problem

Here's where it gets really concerning: interns aged 14 to 16 were making critical data privacy decisions and handling legal complaints. They were processing other minors' personal information without proper oversight or any real understanding of their legal obligations.

There's no qualified data protection officer, no central identity management system (at least none that is deeply integrated into everything as of 10 July 2025), and personal data is scattered across third-party services like Airtable with minimal access controls. For an organisation serving minors, this represents a fundamental failure to meet basic data protection standards under US laws like COPPA, the European GDPR, and regulations worldwide.

Even worse, physical addresses collected for identity verification were being used for a mail forwarding service without user consent. Users were automatically enrolled in marketing emails when signing up for services. They basically treat personal data like it's just another convenience tool instead of, you know, sensitive information that needs protecting.

Leadership Response

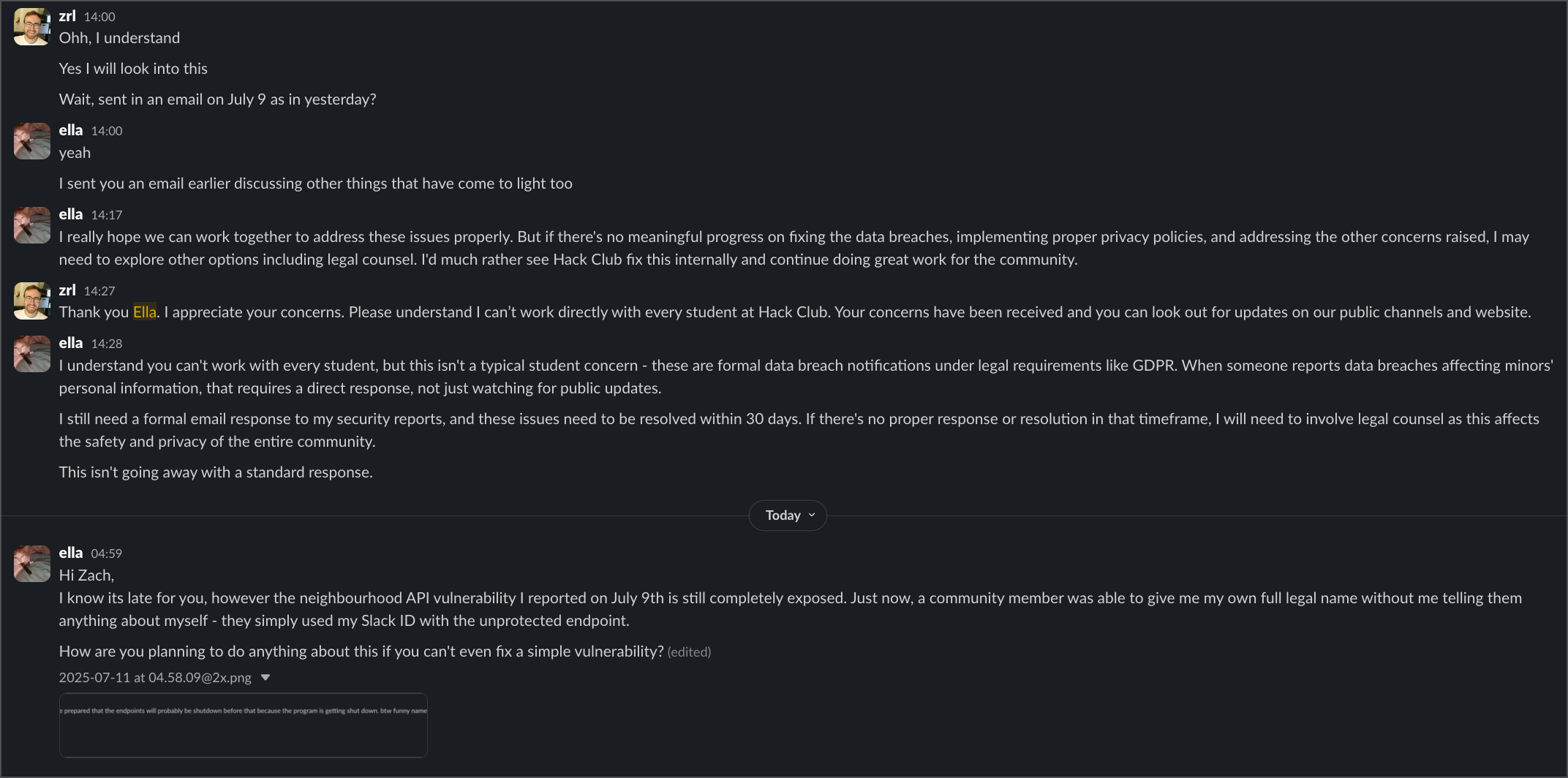

When I brought these concerns directly to founder Zach Latta, his response was dismissive: "Please understand I can't work directly with every student at Hack Club. Your concerns have been received and you can look out for updates on our public channels and website."

This isn't just some student complaint - these are formal data breach notifications that they're legally required to respond to, affecting thousands of users including minors.

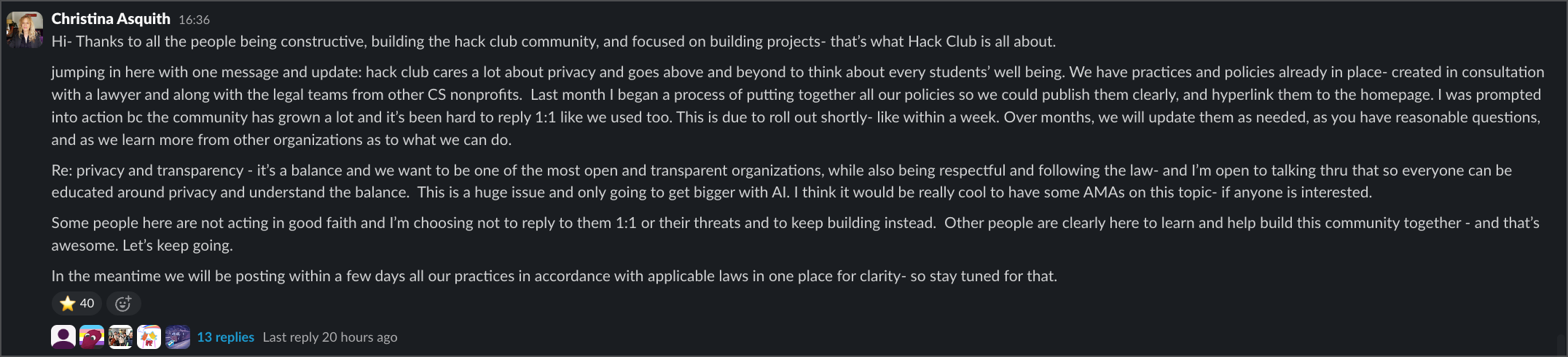

COO Christina Asquith posted an "unofficial statement" on Slack acknowledging they were only now "putting together all our policies so we could publish them clearly." She admitted starting this process only "last month" - supposedly before my complaints - while characterising legitimate privacy concerns as people "not acting in good faith" making "threats." (aka me.)

Even more concerning, after my public meta post, both the gdpr@hackclub.com email and their GDPR data removal request form were taken down. Rather than addressing the issues, they appear to be removing the very channels people would use to report data protection concerns.

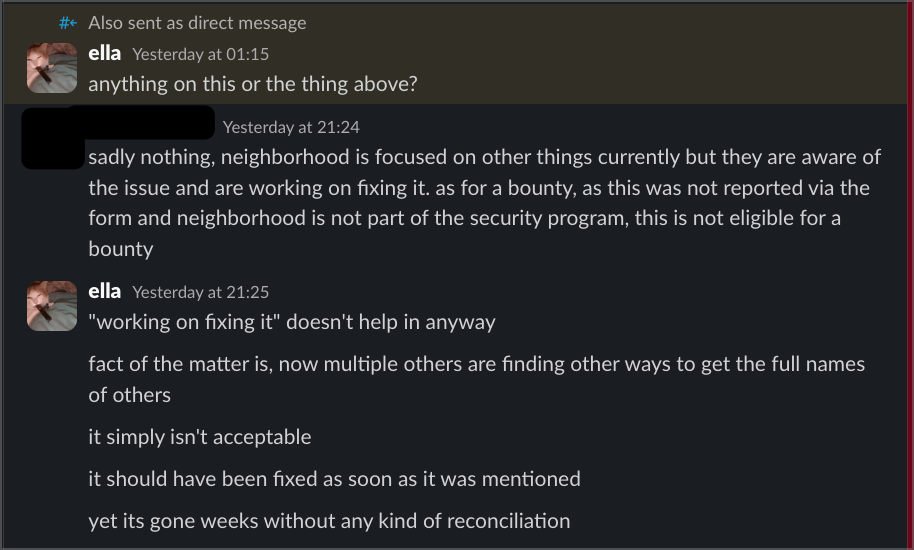

Most recently, when asked about fixing the Neighbourhood vulnerability, one of the interns stated: "sadly nothing, neighborhood is focused on other things currently but they are aware of the issue and are working on fixing it." They admitted knowing about the vulnerability but prioritising other work over protecting user data.

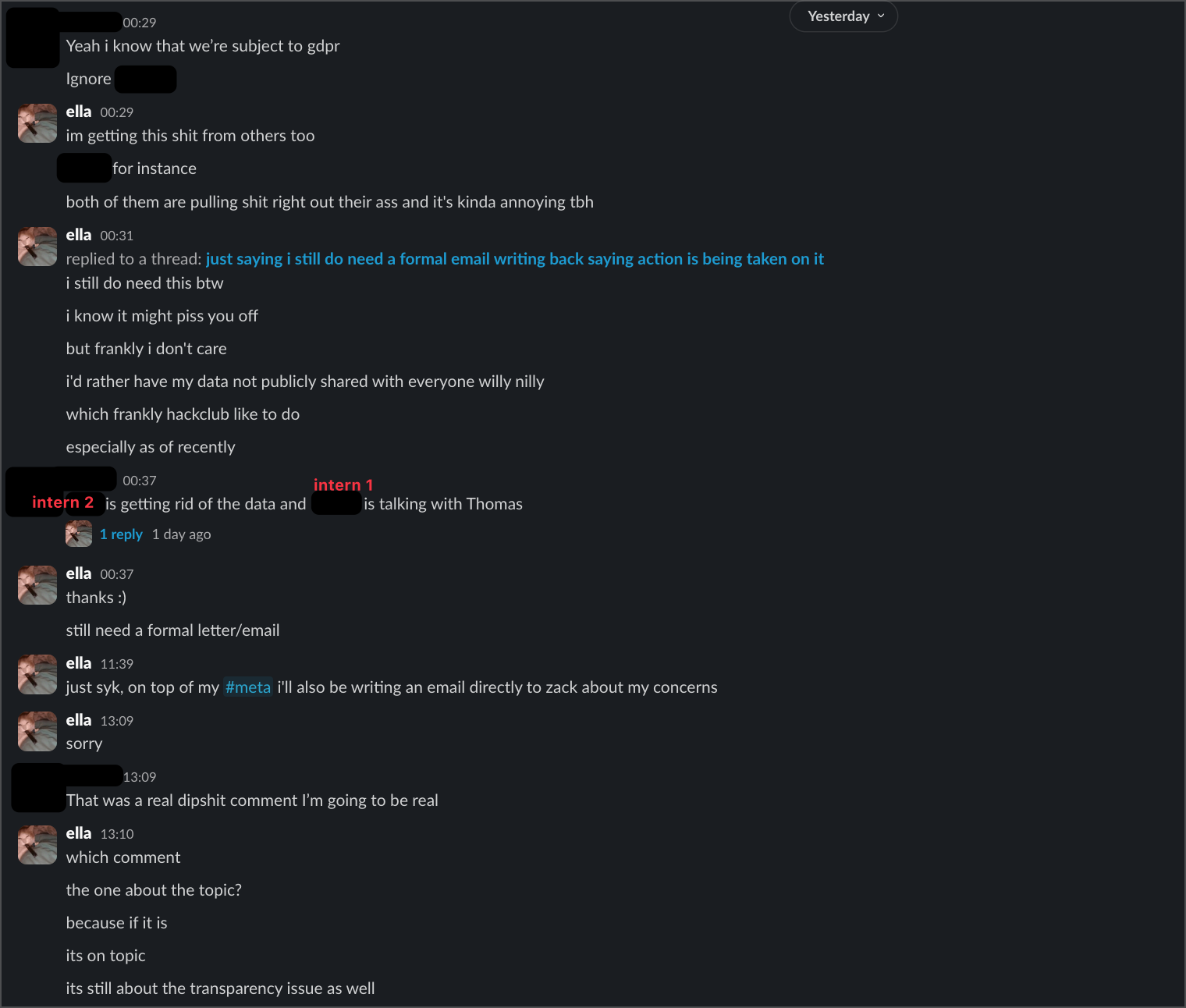

To make matters worse, another intern told me "Intern 2 is getting rid of the data and Intern 1 is talking with Thomas" regarding clearing my personal data from Neighbourhood. That was over 24 hours ago, and my data is still there - another example of promises made but not kept.

Direct Evidence: Developer Admissions

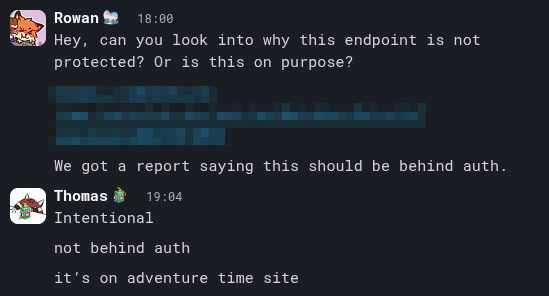

(This section was written on 12 July 2025; the evidence was sent to me the previous evening at 22:20.) If all that wasn't enough, here's the most damning part: I have direct evidence from internal discussions showing that the exposure of personal data via the Neighbourhood API was not an accident, but an intentional decision by the developer, Thomas.

When asked why the endpoint was not protected, Thomas replied:

"Intentional. Not behind auth. It’s on adventure time site."

When another team member pointed out, "we should not be exposing this...", Thomas simply replied:

"Why?"

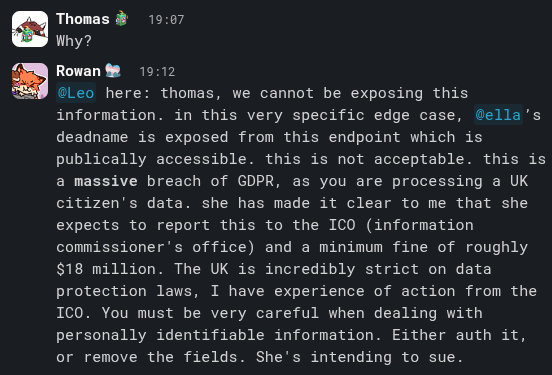

Rowan, another team member, explicitly warned Thomas that this was a massive GDPR breach, that my deadname was being exposed, and that this could result in a major fine from the UK ICO. Despite this, Thomas did not take immediate action to secure the endpoint.

This shows the exposure of personal data was a conscious choice, not an oversight. The developer was explicitly warned about the legal and ethical risks, including GDPR and UK law, and still did not act. This is willful negligence, not just ignorance or lack of resources.

The Community Pushback

When I made this public in the meta channel, the responses were... interesting. Some community members accused me of "knee-jerk outrage" and making "perfectionist demands" for wanting basic API authentication. Others said I was "scaring away the very people trying to keep things running" and that public criticism was "poisoning the well."

But here's the thing - I'm not asking for "enterprise-grade security." I'm asking for basic authentication on an API endpoint. That's not perfectionism, that's Security 101. When anyone can pull up my full legal name just by plugging my Slack ID into a URL, that's not a complex technical challenge - it's a fundamental failure.
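To show how small this ask is, here's a minimal sketch of the kind of check I mean. To be clear, this is not Hack Club's code and I haven't seen their implementation: the function names, the in-memory `LEGAL_NAMES` and `API_TOKENS` dictionaries, and the bearer-token scheme are all assumptions made up for illustration. The idea is simply that a caller has to prove who they are, and can only look up their own name.

```python
import hmac
from typing import Mapping, Optional, Tuple

# Hypothetical in-memory data, standing in for a real user store and token store.
LEGAL_NAMES = {"U0000AAA": "Alex Example", "U0000BBB": "Sam Sample"}
API_TOKENS = {"U0000AAA": "token-for-alex", "U0000BBB": "token-for-sam"}


def authenticate(headers: Mapping[str, str]) -> Optional[str]:
    """Return the Slack ID that owns the presented bearer token, or None."""
    scheme, _, presented = headers.get("Authorization", "").partition(" ")
    if scheme != "Bearer" or not presented:
        return None
    for slack_id, expected in API_TOKENS.items():
        # Constant-time comparison avoids leaking token contents through timing.
        if hmac.compare_digest(presented.encode(), expected.encode()):
            return slack_id
    return None


def get_legal_name(headers: Mapping[str, str], requested_slack_id: str) -> Tuple[int, dict]:
    """Return (HTTP-style status, body) for a legal-name lookup."""
    caller = authenticate(headers)
    if caller is None:
        # No valid credentials: reveal nothing.
        return 401, {"error": "authentication required"}
    if caller != requested_slack_id:
        # Authenticated, but asking about someone else.
        return 403, {"error": "forbidden"}
    name = LEGAL_NAMES.get(requested_slack_id)
    if name is None:
        return 404, {"error": "not found"}
    return 200, {"legal_name": name}
```

With this in place, a request with no `Authorization` header gets a 401, a request using someone else's token gets a 403, and only the account owner gets their own name back. A real service would likely add an admin role and proper token storage, but even this much closes the hole described above.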

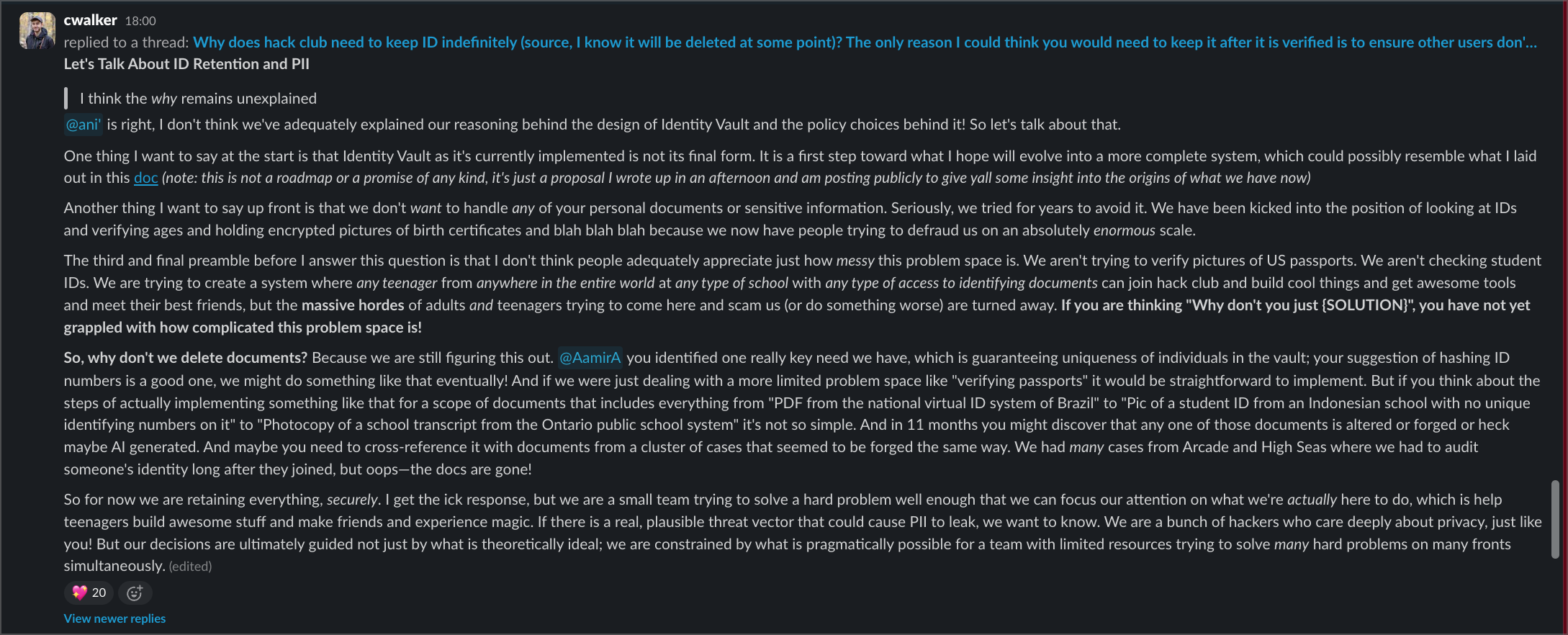

The deflection was telling too. When HQ finally responded publicly, they wrote a long explanation about Identity Vault and document verification complexity. But that completely missed the point - I wasn't complaining about document retention policies, I was reporting an API that exposes names without any authentication whatsoever. Classic deflection: address a different, more defensible problem instead of the actual vulnerability.

The "Official" Response About GDPR Email

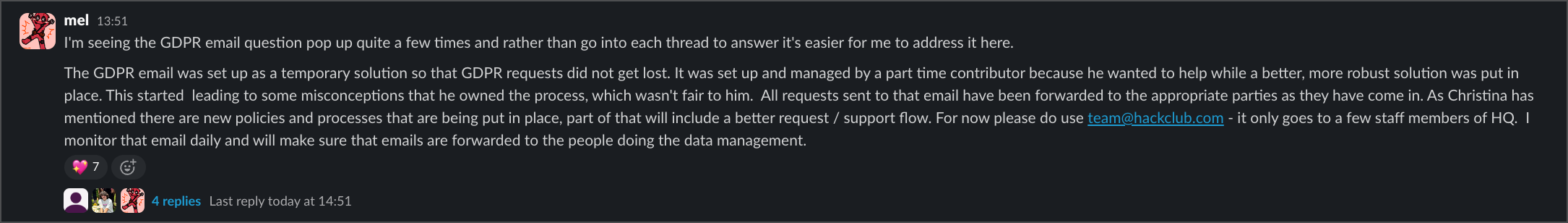

After a few days, Melanie Smith (Director of Operations at HCB) finally addressed why the GDPR email disappeared. According to her, it was managed by a "part time contributor" as a "temporary solution" and all requests were supposedly "forwarded to appropriate parties."

This raises more questions than it answers. If requests were being forwarded, why did my July 9th formal breach notification get zero response? Having part-time volunteers handle GDPR requests is itself a compliance issue. And the timing is suspicious - the email disappeared right after my public post, but now they're framing it as a planned transition.

She directed people to use team@hackclub.com instead, saying she monitors it daily. But if that's true, why hasn't she forwarded my security@ and gdpr@ emails that were sent days ago?

The Legal Violations

Based on my research and expert consultation, here are the specific laws Hack Club appears to have violated:

GDPR Violations (European users)

- Article 5 - Data protection principles (inadequate security, no lawful basis for processing)

- Article 6 - Lawfulness of processing (no legal basis for most data collection)

- Article 8 - Child consent (processing data from under-16s without parental consent)

- Article 12 - Transparent communication (no response to data subject requests within required timeframes)

- Article 13 - Information provision (no privacy policies before data collection)

- Article 25 - Data protection by design (systems built without basic security measures)

- Article 28 - Third-party processing (improper data sharing via Airtable)

- Article 32 - Security of processing (unprotected API endpoints exposing personal data)

- Article 33 - Breach notification (failed to notify supervisory authorities within 72 hours)

COPPA Violations (US children under 13)

- Collecting personal information from children without verifiable parental consent

- No privacy policy explaining data practices before collection

- Sharing personal information with third parties without proper disclosure

- Using personal information for purposes beyond the original collection

POPIA Violations (South African users)

- Section 9 - Processing limitation (processing without lawful basis)

- Section 23 - Data subject participation (not responding to access requests)

- Section 19 - Security safeguards (inadequate technical and organisational measures)

What This Actually Means

Look, this isn't about being difficult or trying to attack Hack Club. I genuinely believe in their mission of supporting young makers. But protecting young makers means actually protecting them - including their personal information.

The pattern here is pretty clear:

- Multiple data breaches across programmes

- Systematic misuse of personal data

- Legally ignorant responses from staff

- Dismissive leadership when issues are reported

- Admissions that required policies didn't exist

- Continued delays in addressing known vulnerabilities

What Needs to Happen

Organisations serving minors have heightened responsibilities for data protection. When they collect personal information from children and expose it through insecure systems, when they dismiss legal concerns with ChatGPT advice, when they admit to lacking basic privacy policies required by law - that demands accountability.

The community deserves transparency, acknowledgement of these failures, and concrete action to fix them. This includes implementing proper data governance, appointing qualified adults to handle privacy decisions, and removing minors from roles involving sensitive data management.

If these issues aren't addressed seriously and promptly, the community should know that regulatory authorities including the ICO, FTC, and others have mechanisms to ensure compliance - even for organisations that believe they're above the law.

The mission of supporting young makers is brilliant. But it can't be fulfilled while simultaneously failing to protect them.

This post documents ongoing data privacy concerns at Hack Club based on publicly available information and documented communications with organisation staff.

For those who would like to make reports to government bodies, you are more than welcome to use anything on this page to make your case.

It's seriously a shame that we can't have nice things. One of the Hack Club interns (Intern 1, to be specific) decided to bot the "i agree" and "i disagree" buttons using Tor exit nodes, so I had to remove all the votes. Sorry.